Databricks agent¶

This guide provides an overview of how to set up the Databricks agent in your Flyte deployment.

Spin up a cluster¶

You can spin up a demo cluster using the following command:

flytectl demo start

Alternatively, install Flyte using the flyte-binary Helm chart.

If you’ve installed Flyte using the flyte-core Helm chart, ensure that:

You have the correct kubeconfig and have selected the correct Kubernetes context.

You have configured the correct flytectl settings in ~/.flyte/config.yaml.

Note

Add the Flyte chart repo to Helm if you’re installing via the Helm charts.

helm repo add flyteorg https://flyteorg.github.io/flyte

Databricks workspace¶

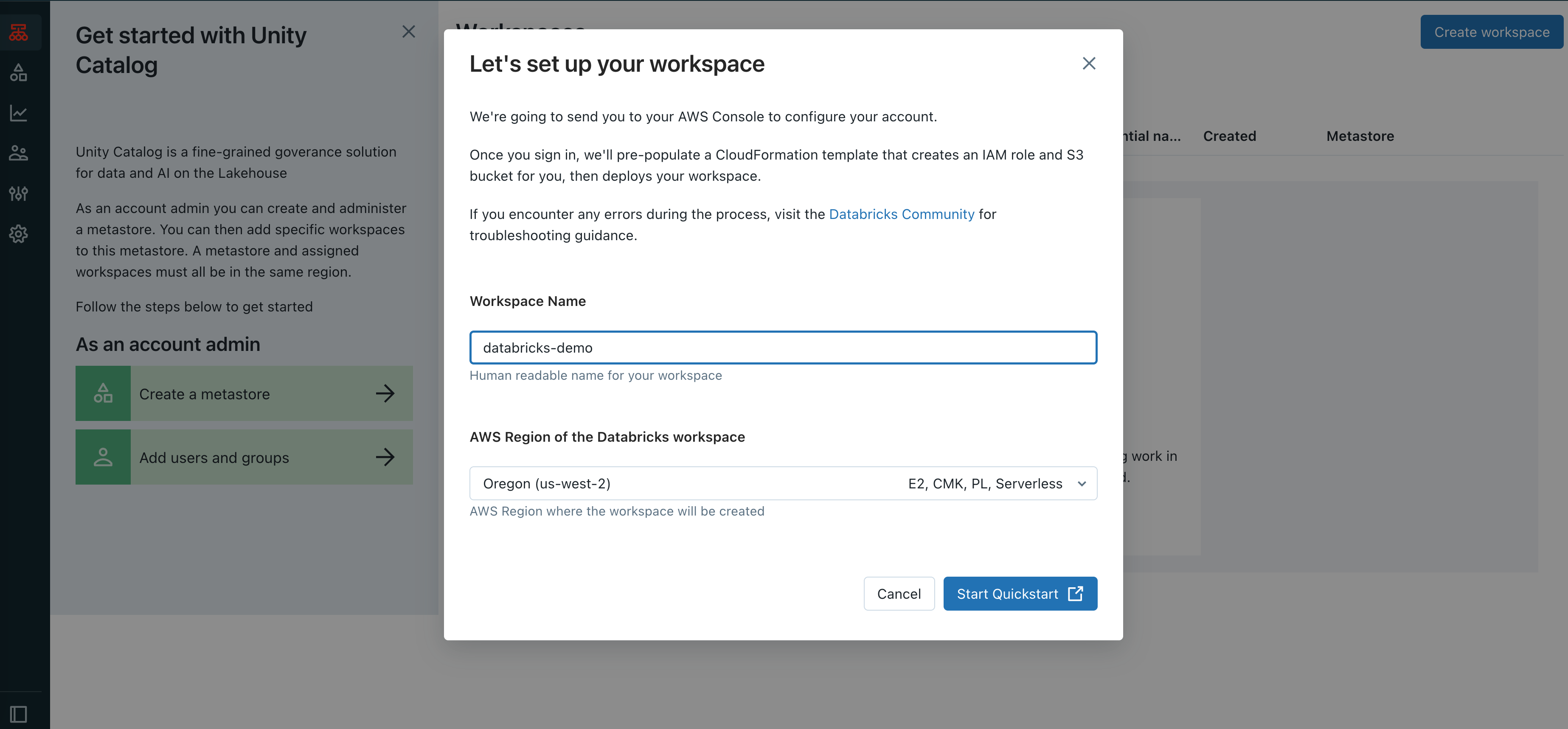

To set up your Databricks account, follow these steps:

Create a Databricks account.

Ensure that you have a Databricks workspace up and running.

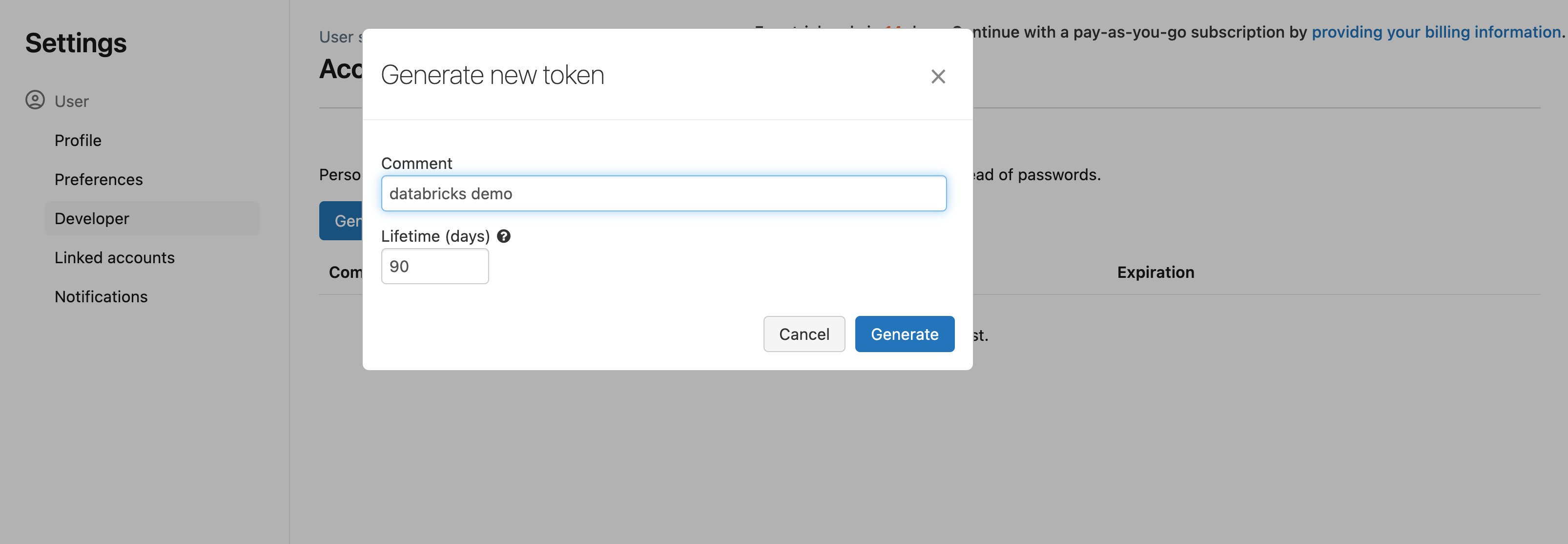

Generate a personal access token to be used in the Flyte configuration. You can find the personal access token in the user settings within the workspace.

User settings->Developer->Access tokens

Enable custom containers on your Databricks cluster before you trigger the workflow.

curl -X PATCH -n -H "Authorization: Bearer <your-personal-access-token>" \
  https://<databricks-instance>/api/2.0/workspace-conf \
  -d '{"enableDcs": "true"}'

For more detail, check custom containers.

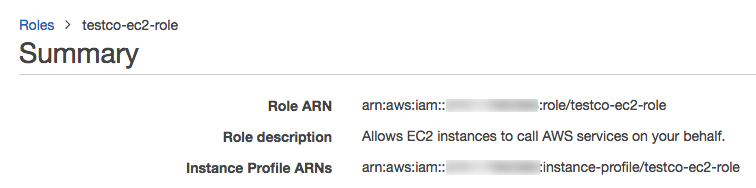

Create an instance profile for the Spark cluster. This profile enables the Spark job to access your data in the S3 bucket.
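The workspace-conf request from the custom containers step can also be prepared from Python. The following is a minimal sketch using only the standard library; the function name `build_enable_dcs_request` and its parameters are illustrative and not part of any Databricks or Flyte SDK. It only builds the request object, so nothing is sent until you pass it to `urllib.request.urlopen`.

```python
import json
import urllib.request


def build_enable_dcs_request(databricks_instance: str, token: str) -> urllib.request.Request:
    """Build the PATCH request that sets enableDcs to "true".

    Hypothetical helper that mirrors the curl command in this guide.
    """
    url = f"https://{databricks_instance}/api/2.0/workspace-conf"
    # Same payload as the -d argument of the curl command.
    body = json.dumps({"enableDcs": "true"}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="PATCH",
        headers={"Authorization": f"Bearer {token}"},
    )


if __name__ == "__main__":
    # Placeholder values; replace them with your workspace host and token.
    request = build_enable_dcs_request("<databricks-instance>", "<your-personal-access-token>")
    print(request.get_method(), request.full_url)
```

Keeping request construction separate from sending makes it easy to inspect the URL and headers before touching the workspace.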

Create an instance profile using the AWS console (For AWS Users)¶

In the AWS console, go to the IAM service.

Click the Roles tab in the sidebar.

Click Create role.

Under Trusted entity type, select AWS service.

Under Use case, select EC2.

Click Next.

At the bottom of the page, click Next.

In the Role name field, type a role name.

Click Create role.

In the role list, click the AmazonS3FullAccess role.

Click the Create role button.

In the role summary, copy the Role ARN.

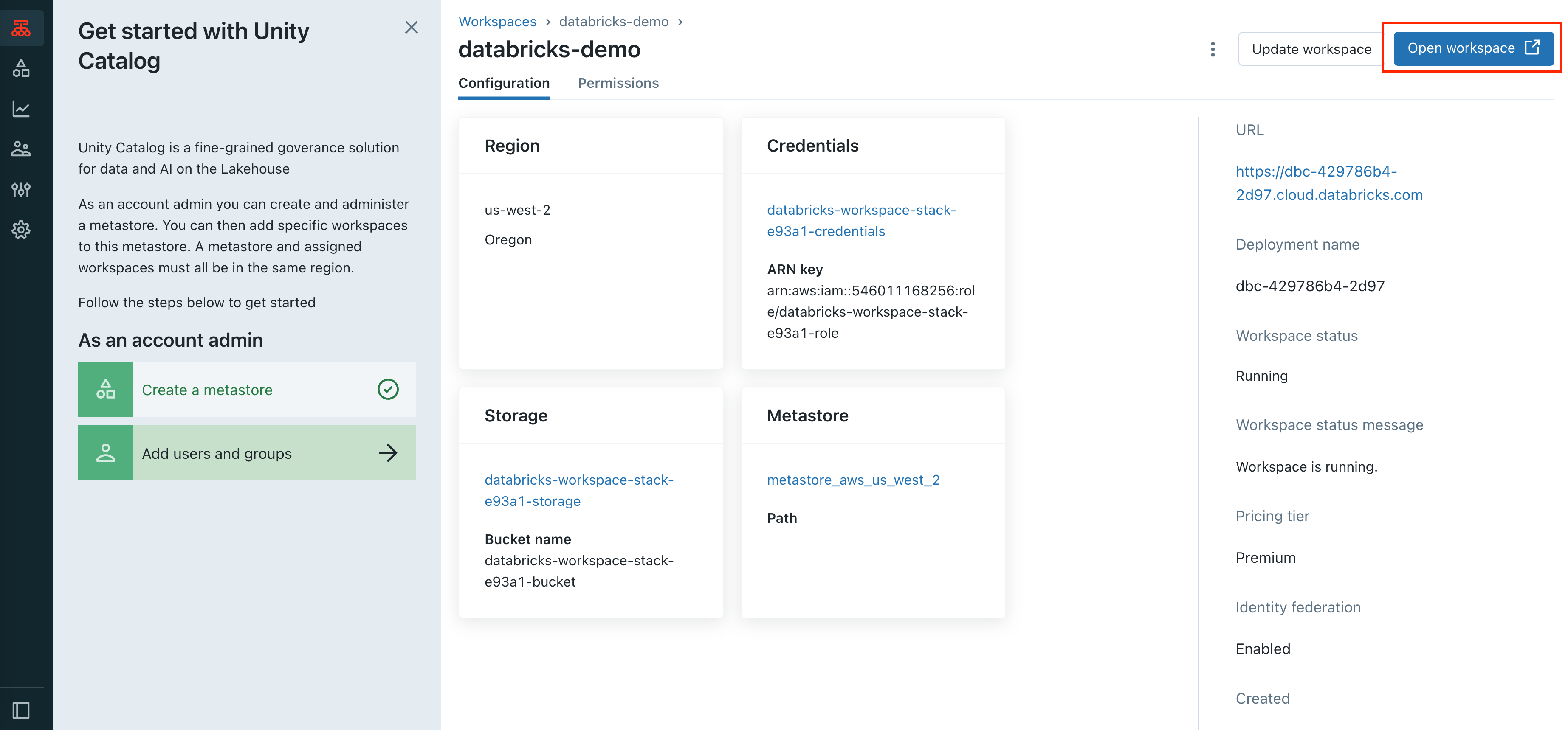

Locate the IAM role that created the Databricks deployment¶

If you don’t know which IAM role created the Databricks deployment, do the following:

As an account admin, log in to the account console.

Go to Workspaces and click your workspace name.

In the Credentials box, note the role name at the end of the Role ARN.

For example, in the Role ARN arn:aws:iam::123456789123:role/finance-prod, the role name is finance-prod.
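As an illustration of how the role name relates to the Role ARN, here is a small sketch that extracts it. The helper `role_name_from_arn` is a hypothetical name introduced for this example, and the parsing assumes the standard `arn:<partition>:iam::<account-id>:role/<name>` layout.

```python
def role_name_from_arn(role_arn: str) -> str:
    """Return the role name at the end of an IAM role ARN.

    Hypothetical helper; assumes the arn:<partition>:iam::<account>:role/<name> layout.
    """
    parts = role_arn.split(":", 5)
    if len(parts) != 6 or parts[0] != "arn" or parts[2] != "iam":
        raise ValueError(f"not an IAM ARN: {role_arn!r}")
    resource = parts[5]
    if not resource.startswith("role/") or resource.endswith("/"):
        raise ValueError(f"not a role ARN: {role_arn!r}")
    # Roles created with a path look like role/<path>/<name>; keep the last segment.
    return resource.rsplit("/", 1)[-1]


print(role_name_from_arn("arn:aws:iam::123456789123:role/finance-prod"))  # prints finance-prod
```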

Edit the IAM role that created the Databricks deployment¶

In the AWS console, go to the IAM service.

Click the Roles tab in the sidebar.

Click the role that created the Databricks deployment.

On the Permissions tab, click the policy.

Click Edit Policy.

Append the following block to the end of the Statement array. Ensure that you don’t overwrite any of the existing policy. Replace <iam-role-for-s3-access> with the role you created in Configure S3 access with instance profiles.

{
  "Effect": "Allow",
  "Action": "iam:PassRole",
  "Resource": "arn:aws:iam::<aws-account-id-databricks>:role/<iam-role-for-s3-access>"
}
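If you manage the policy document as JSON rather than through the console editor, the append step can be sketched as below. This is an illustrative helper, not an AWS API: `append_pass_role` and its parameters are hypothetical names, and the function works on a policy that you have already loaded into a Python dict.

```python
import copy


def append_pass_role(policy: dict, account_id: str, role_name: str) -> dict:
    """Return a copy of the policy with an iam:PassRole statement appended.

    Hypothetical helper: existing statements are preserved, never overwritten.
    """
    updated = copy.deepcopy(policy)
    statements = updated.get("Statement", [])
    # IAM also accepts a single statement object; normalize it to a list.
    if isinstance(statements, dict):
        statements = [statements]
    statements.append(
        {
            "Effect": "Allow",
            "Action": "iam:PassRole",
            "Resource": f"arn:aws:iam::{account_id}:role/{role_name}",
        }
    )
    updated["Statement"] = statements
    return updated
```

Returning a modified copy leaves the original document untouched, which makes it easy to compare the two before saving the change.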

Specify agent configuration¶

Enable the Databricks agent on the demo cluster by updating the ConfigMap:

kubectl edit configmap flyte-sandbox-config -n flyte

tasks:
  task-plugins:
    default-for-task-types:
      container: container
      container_array: k8s-array
      sidecar: sidecar
      spark: agent-service
    enabled-plugins:
      - container
      - sidecar
      - k8s-array
      - agent-service
plugins:
  agent-service:
    supportedTaskTypes:
      - spark

If you installed Flyte using the flyte-binary Helm chart, edit the relevant YAML file to specify the plugin.

tasks:
  task-plugins:
    enabled-plugins:
      - container
      - sidecar
      - k8s-array
      - agent-service
    default-for-task-types:
      - container: container
      - container_array: k8s-array
      - spark: agent-service
plugins:
  agent-service:
    supportedTaskTypes:
      - spark

If you installed Flyte using the flyte-core Helm chart, create a file named values-override.yaml and add the following config to it:

enabled_plugins:
  tasks:
    task-plugins:
      enabled-plugins:
        - container
        - sidecar
        - k8s-array
        - agent-service
      default-for-task-types:
        container: container
        sidecar: sidecar
        container_array: k8s-array
        spark: agent-service
  plugins:
    agent-service:
      supportedTaskTypes:
        - spark
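The three configurations above share one pattern: agent-service is listed under enabled-plugins, spark is mapped to agent-service under default-for-task-types, and spark appears in the agent's supportedTaskTypes. The sketch below checks that pattern on a config that has already been loaded into a Python dict. It is a hypothetical sanity check written for this guide, not something Flyte itself runs, and the function names are illustrative.

```python
def normalize_defaults(defaults):
    """Accept either a mapping or a list of single-key mappings."""
    if isinstance(defaults, dict):
        return dict(defaults)
    merged = {}
    for entry in defaults:
        merged.update(entry)
    return merged


def find_plugin_problems(config):
    """Return a list of human-readable inconsistencies (empty if none)."""
    task_plugins = config["tasks"]["task-plugins"]
    enabled = set(task_plugins["enabled-plugins"])
    defaults = normalize_defaults(task_plugins["default-for-task-types"])
    problems = []
    # Every default plugin must also be enabled.
    for task_type, plugin in defaults.items():
        if plugin not in enabled:
            problems.append(f"{task_type} defaults to {plugin}, which is not enabled")
    # Every task type the agent supports should be routed to agent-service.
    agent = config.get("plugins", {}).get("agent-service", {})
    for task_type in agent.get("supportedTaskTypes", []):
        if defaults.get(task_type) != "agent-service":
            problems.append(f"{task_type} is not routed to agent-service")
    return problems


# The demo cluster configuration from this guide, written as a dict.
demo_config = {
    "tasks": {
        "task-plugins": {
            "default-for-task-types": {
                "container": "container",
                "container_array": "k8s-array",
                "sidecar": "sidecar",
                "spark": "agent-service",
            },
            "enabled-plugins": ["container", "sidecar", "k8s-array", "agent-service"],
        }
    },
    "plugins": {"agent-service": {"supportedTaskTypes": ["spark"]}},
}

print(find_plugin_problems(demo_config))  # prints []
```

For the flyte-core layout, pass the value stored under the enabled_plugins key rather than the whole file.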

Add the Databricks access token¶

You must add the Databricks access token to the Flyte configuration.

Install the flyteagent pod using Helm:

helm repo add flyteorg https://flyteorg.github.io/flyte
helm install flyteagent flyteorg/flyteagent --namespace flyte

Get the base64 value of your Databricks token.

echo -n "<DATABRICKS_TOKEN>" | base64

Edit the flyteagent secret and set flyte_databricks_access_token to the base64-encoded token:

kubectl edit secret flyteagent -n flyte

apiVersion: v1
data:
  flyte_databricks_access_token: <BASE64_ENCODED_DATABRICKS_TOKEN>
kind: Secret
metadata:
  annotations:
    meta.helm.sh/release-name: flyteagent
    meta.helm.sh/release-namespace: flyte
  creationTimestamp: "2023-10-04T04:09:03Z"
  labels:
    app.kubernetes.io/managed-by: Helm
  name: flyteagent
  namespace: flyte
  resourceVersion: "753"
  uid: 5ac1e1b6-2a4c-4e26-9001-d4ba72c39e54
type: Opaque
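The base64 step above can also be done in Python, which avoids accidentally encoding a trailing newline (the reason the shell command uses `echo -n`). This is a minimal sketch; `encode_token` is a hypothetical helper name.

```python
import base64


def encode_token(token: str) -> str:
    """Base64-encode a token the way `echo -n "<token>" | base64` does."""
    # Strip stray whitespace so a copied newline does not end up in the secret.
    return base64.b64encode(token.strip().encode("utf-8")).decode("ascii")


if __name__ == "__main__":
    # Placeholder value; replace it with your real token before use.
    print(encode_token("<DATABRICKS_TOKEN>"))
```

Paste the printed value into the flyte_databricks_access_token field of the secret.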

Upgrade the deployment¶

On the demo cluster, restart the deployment:

kubectl rollout restart deployment flyte-sandbox -n flyte

If you installed Flyte using the flyte-binary Helm chart, upgrade the release:

helm upgrade <RELEASE_NAME> flyteorg/flyte-binary -n <YOUR_NAMESPACE> --values <YOUR_YAML_FILE>

Replace <RELEASE_NAME> with the name of your release (e.g., flyte-backend), <YOUR_NAMESPACE> with the name of your namespace (e.g., flyte), and <YOUR_YAML_FILE> with the name of your YAML file.

If you installed Flyte using the flyte-core Helm chart, upgrade the release:

helm upgrade <RELEASE_NAME> flyteorg/flyte-core -n <YOUR_NAMESPACE> --values values-override.yaml

Replace <RELEASE_NAME> with the name of your release (e.g., flyte) and <YOUR_NAMESPACE> with the name of your namespace (e.g., flyte).

Wait for the upgrade to complete. You can check the status of the deployment pods by running the following command:

kubectl get pods -n flyte

For information on using the Databricks agent on the Flyte cluster, see Databricks agent.